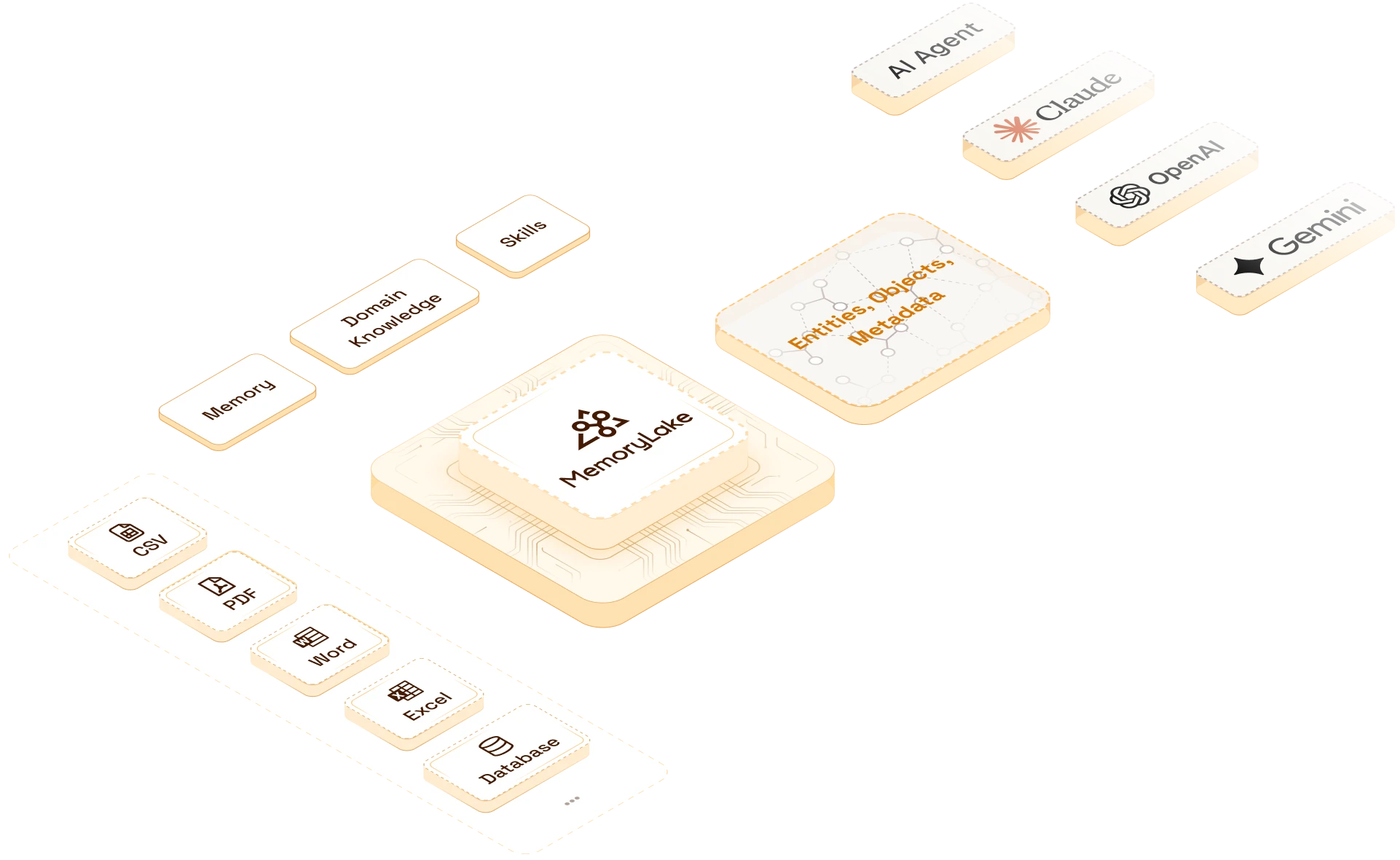

Distributed, Concise, Complete and Self-Evolving.

Powered by MemoryLake

Works with every model.

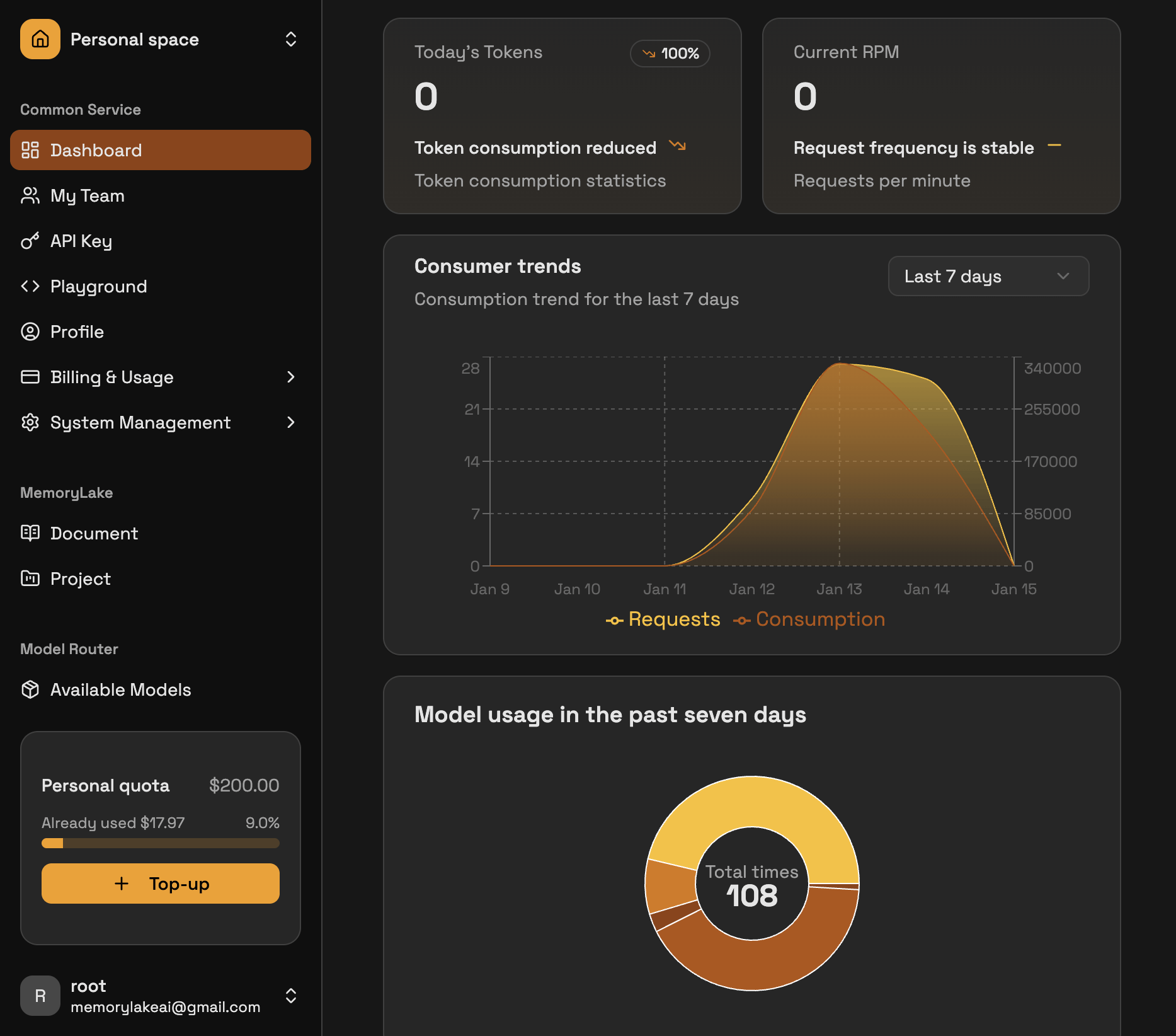

Faster. Better. Cheaper.

98% accuracy

Truly relevant answers

Beyond pure similarity, MemoryLake finds what actually matters.

Reasoning-powered search

It doesn’t just match; it thinks as it retrieves.

Flexible token output

From concise summaries to million-token deep dives, tailored to your LLM.

3× cost reduction (MemoryLake vs. LLMs)

One-time indexing: pay once, reuse endlessly.

Save LLM tokens: only the most relevant context is passed, so no tokens are wasted.

1000× capacity

Attached files (LLMs): limited to tens of files, a few hundred MB.

MemoryLake: scales to thousands of files, hundreds of TB.

Enterprise & Security

Enterprise-ready and built to scale

Deploy MemoryLake in your cloud, hybrid, or on-prem environment. Redundant storage, graph-based indexing, and sub-300 ms recall keep retrieval fast and your data secure and fully compliant.